first commit

This commit is contained in:

commit

f54fbb05aa

21

LICENSE

Normal file

21

LICENSE

Normal file

@ -0,0 +1,21 @@

|

||||

MIT License

|

||||

|

||||

Copyright (c) 2019 Lam1360

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

67

README.md

Normal file

67

README.md

Normal file

@ -0,0 +1,67 @@

|

||||

# YOLOv3-model-pruning

|

||||

|

||||

用 YOLOv3 模型在一个开源的人手检测数据集 [oxford hand](http://www.robots.ox.ac.uk/~vgg/data/hands/) 上做人手检测,并在此基础上做模型剪枝。对于该数据集,对 YOLOv3 进行 channel pruning 之后,模型的参数量、模型大小减少 80% ,FLOPs 降低 70%,前向推断的速度可以达到原来的 200%,同时可以保持 mAP 基本不变。

|

||||

|

||||

## 环境

|

||||

|

||||

Python3.6, Pytorch 1.0及以上

|

||||

|

||||

YOLOv3 的实现参考了 eriklindernoren 的 [PyTorch-YOLOv3](https://github.com/eriklindernoren/PyTorch-YOLOv3) ,因此代码的依赖环境也可以参考其 repo

|

||||

|

||||

## 数据集准备

|

||||

|

||||

1. 下载[数据集](http://www.robots.ox.ac.uk/~vgg/data/hands/downloads/hand_dataset.tar.gz),得到压缩文件

|

||||

2. 将压缩文件解压到 data 目录,得到 hand_dataset 文件夹

|

||||

3. 在 data 目录下执行 converter.py,生成 images、labels 文件夹和 train.txt、valid.txt 文件。训练集中一共有 4807 张图

|

||||

片,测试集中一共有 821 张图片

|

||||

|

||||

## 正常训练(Baseline)

|

||||

|

||||

```bash

|

||||

python train.py --model_def config/yolov3-hand.cfg

|

||||

```

|

||||

|

||||

## 剪枝算法介绍

|

||||

|

||||

本代码基于论文 [Learning Efficient Convolutional Networks Through Network Slimming (ICCV 2017)](http://openaccess.thecvf.com/content_iccv_2017/html/Liu_Learning_Efficient_Convolutional_ICCV_2017_paper.html) 进行改进实现的 channel pruning算法,类似的代码实现还有这个 [yolov3-network-slimming](https://github.com/talebolano/yolov3-network-slimming)。原始论文中的算法是针对分类模型的,基于 BN 层的 gamma 系数进行剪枝的。

|

||||

|

||||

### 剪枝算法的大概步骤

|

||||

|

||||

以下只是算法的大概步骤,具体实现过程中还要做 s 参数的尝试或者需要进行迭代式剪枝等。

|

||||

|

||||

1. 进行稀疏化训练

|

||||

|

||||

```bash

|

||||

python train.py --model_def config/yolov3-hand.cfg -sr --s 0.01

|

||||

```

|

||||

|

||||

2. 基于 test_prune.py 文件进行剪枝,得到剪枝后的模型

|

||||

|

||||

3. 对剪枝后的模型进行微调

|

||||

```bash

|

||||

python train.py --model_def config/prune_yolov3-hand.cfg -pre checkpoints/prune_yolov3_ckpt.pth

|

||||

```

|

||||

|

||||

### 剪枝前后的对比

|

||||

|

||||

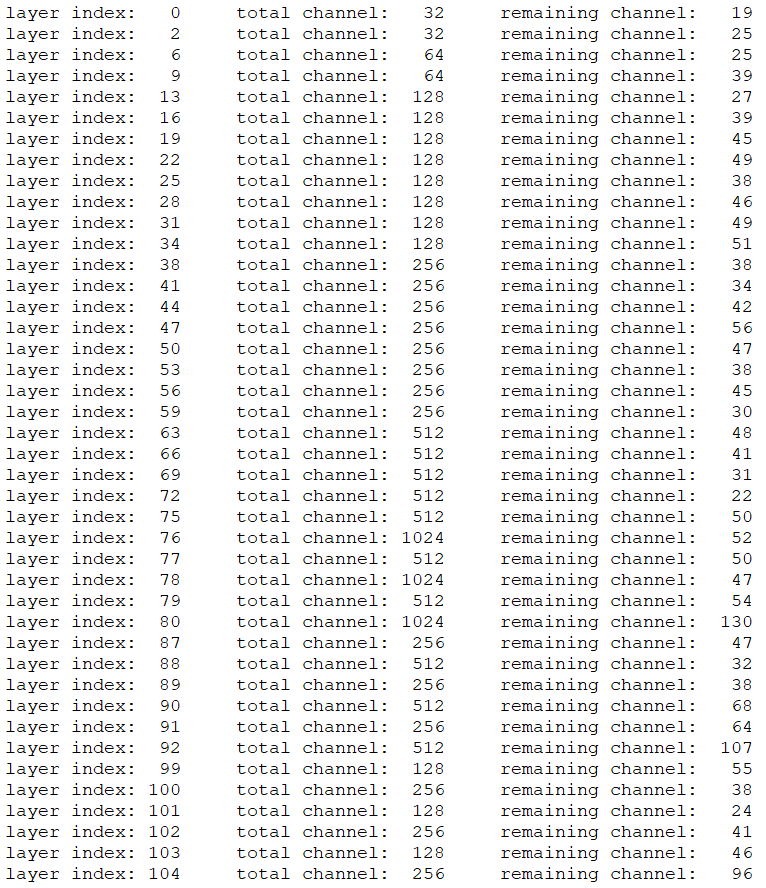

1. 下图为对部分卷积层进行剪枝前后通道数的变化:

|

||||

|

||||

|

||||

> 部分卷积层的通道数大幅度减少

|

||||

|

||||

2. 剪枝前后指标对比:

|

||||

|

||||

| | 参数数量 | 模型体积 |Flops | 前向推断耗时(2070 TI) | mAP |

|

||||

| :------------: | :------:| :-----: | :---: | :-------------------: | :----: |

|

||||

| Baseline (416) | 61.5M | 246.4MB |32.8B | 15.0 ms | 0.7692 |

|

||||

| Prune (416) | 10.9M | 43.6MB | 9.6B | 7.7 ms | 0.7722 |

|

||||

| Finetune (416) | 同上 | 同上 | 同上 | 同上 | 0.7750 |

|

||||

|

||||

> 加入稀疏正则项之后,mAP 反而更高了(在实验过程中发现,其实 mAP上下波动 0.02 是正常现象),因此可以认为稀疏训练得到的 mAP 与正常训练几乎一致。将 prune 后得到的模型进行 finetune 并没有明显的提升,因此剪枝三步可以直接简化成两步。剪枝前后模型的参数量、模型大小降为原来的 1/6 ,FLOPs 降为原来的 1/3,前向推断的速度可以达到原来的 2 倍,同时可以保持 mAP 基本不变。*需要明确的是,上面表格中剪枝的效果是只是针对该数据集的,不一定能保证在其他数据集上也有同样的效果*

|

||||

|

||||

3. 剪枝后模型的测试:

|

||||

|

||||

Prune 模型的权重已放在百度网盘上 ([提取码: gnzx](https://pan.baidu.com/s/13Ycj7JccBHWYF590bgFRxQ)),可以通过执行以下代码进行测试:

|

||||

```bash

|

||||

python test.py --model_def config/prune_yolov3-hand.cfg --weights_path weights/prune_yolov3_ckpt.pth --data_config config/oxfordhand.data --class_path data/oxfordhand.names --conf_thres 0.01

|

||||

```

|

||||

BIN

__pycache__/debug_utils.cpython-38.pyc

Normal file

BIN

__pycache__/debug_utils.cpython-38.pyc

Normal file

Binary file not shown.

BIN

__pycache__/models.cpython-38.pyc

Normal file

BIN

__pycache__/models.cpython-38.pyc

Normal file

Binary file not shown.

BIN

__pycache__/models.cpython-39.pyc

Normal file

BIN

__pycache__/models.cpython-39.pyc

Normal file

Binary file not shown.

759

__pycache__/prune_0.58_yolov3-person.cfg

Normal file

759

__pycache__/prune_0.58_yolov3-person.cfg

Normal file

@ -0,0 +1,759 @@

|

||||

[net]

|

||||

batch=16

|

||||

subdivisions=1

|

||||

width=416

|

||||

height=416

|

||||

channels=3

|

||||

momentum=0.9

|

||||

decay=0.0005

|

||||

angle=0

|

||||

saturation=1.5

|

||||

exposure=1.5

|

||||

hue=.1

|

||||

learning_rate=0.001

|

||||

burn_in=1000

|

||||

max_batches=500200

|

||||

policy=steps

|

||||

steps=400000,450000

|

||||

scales=.1,.1

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=32

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=64

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=31

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=64

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=35

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=55

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=38

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=72

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=79

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=80

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=76

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=85

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=78

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=62

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=83

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=59

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=33

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=31

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=30

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=21

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=30

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=15

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=73

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=35

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=14

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=71

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=226

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=338

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=214

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=337

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=253

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=898

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=0

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

filters=18

|

||||

activation=linear

|

||||

|

||||

[yolo]

|

||||

mask=6,7,8

|

||||

anchors=10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

||||

classes=1

|

||||

num=9

|

||||

jitter=.3

|

||||

ignore_thresh=.7

|

||||

truth_thresh=1

|

||||

random=1

|

||||

|

||||

[route]

|

||||

layers=-4

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[upsample]

|

||||

stride=2

|

||||

|

||||

[route]

|

||||

layers=-1, 61

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=179

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=224

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=141

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=235

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=153

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=432

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=0

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

filters=18

|

||||

activation=linear

|

||||

|

||||

[yolo]

|

||||

mask=3,4,5

|

||||

anchors=10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

||||

classes=1

|

||||

num=9

|

||||

jitter=.3

|

||||

ignore_thresh=.7

|

||||

truth_thresh=1

|

||||

random=1

|

||||

|

||||

[route]

|

||||

layers=-4

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[upsample]

|

||||

stride=2

|

||||

|

||||

[route]

|

||||

layers=-1, 36

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=96

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=151

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=89

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=159

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=80

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=195

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=0

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

filters=18

|

||||

activation=linear

|

||||

|

||||

[yolo]

|

||||

mask=0,1,2

|

||||

anchors=10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

||||

classes=1

|

||||

num=9

|

||||

jitter=.3

|

||||

ignore_thresh=.7

|

||||

truth_thresh=1

|

||||

random=1

|

||||

|

||||

BIN

__pycache__/prune_0.58_yolov3_ckpt_99_04111754.pth

Normal file

BIN

__pycache__/prune_0.58_yolov3_ckpt_99_04111754.pth

Normal file

Binary file not shown.

BIN

__pycache__/test.cpython-38.pyc

Normal file

BIN

__pycache__/test.cpython-38.pyc

Normal file

Binary file not shown.

BIN

__pycache__/test.cpython-39.pyc

Normal file

BIN

__pycache__/test.cpython-39.pyc

Normal file

Binary file not shown.

759

config/1w_prune_0.6_yolov3-person.cfg

Normal file

759

config/1w_prune_0.6_yolov3-person.cfg

Normal file

@ -0,0 +1,759 @@

|

||||

[net]

|

||||

batch=16

|

||||

subdivisions=1

|

||||

width=416

|

||||

height=416

|

||||

channels=3

|

||||

momentum=0.9

|

||||

decay=0.0005

|

||||

angle=0

|

||||

saturation=1.5

|

||||

exposure=1.5

|

||||

hue=.1

|

||||

learning_rate=0.001

|

||||

burn_in=1000

|

||||

max_batches=500200

|

||||

policy=steps

|

||||

steps=400000,450000

|

||||

scales=.1,.1

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=30

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=64

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=32

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=64

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=54

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=63

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=90

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=107

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=118

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=119

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=113

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=112

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=120

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=113

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=55

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=112

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=147

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=157

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=149

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=155

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=166

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=164

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=66

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=56

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=43

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=34

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=1024

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=173

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=287

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=139

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=182

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=212

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=207

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=0

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

filters=18

|

||||

activation=linear

|

||||

|

||||

[yolo]

|

||||

mask=6,7,8

|

||||

anchors=10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

||||

classes=1

|

||||

num=9

|

||||

jitter=.3

|

||||

ignore_thresh=.7

|

||||

truth_thresh=1

|

||||

random=1

|

||||

|

||||

[route]

|

||||

layers=-4

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[upsample]

|

||||

stride=2

|

||||

|

||||

[route]

|

||||

layers=-1, 61

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=185

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=249

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=156

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=244

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=188

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=125

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=0

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

filters=18

|

||||

activation=linear

|

||||

|

||||

[yolo]

|

||||

mask=3,4,5

|

||||

anchors=10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

||||

classes=1

|

||||

num=9

|

||||

jitter=.3

|

||||

ignore_thresh=.7

|

||||

truth_thresh=1

|

||||

random=1

|

||||

|

||||

[route]

|

||||

layers=-4

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[upsample]

|

||||

stride=2

|

||||

|

||||

[route]

|

||||

layers=-1, 36

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=105

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=90

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=91

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=127

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=103

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

filters=113

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=0

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

filters=18

|

||||

activation=linear

|

||||

|

||||

[yolo]

|

||||

mask=0,1,2

|

||||

anchors=10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

|

||||

classes=1

|

||||

num=9

|

||||

jitter=.3

|

||||

ignore_thresh=.7

|

||||

truth_thresh=1

|

||||

random=1

|

||||

|

||||

759

config/1w_prune_0.7_yolov3-person.cfg

Normal file

759

config/1w_prune_0.7_yolov3-person.cfg

Normal file

@ -0,0 +1,759 @@

|

||||

[net]

|

||||

batch=16

|

||||

subdivisions=1

|

||||

width=416

|

||||

height=416

|

||||

channels=3

|

||||

momentum=0.9

|

||||

decay=0.0005

|

||||

angle=0

|

||||

saturation=1.5

|

||||

exposure=1.5

|

||||

hue=.1

|

||||

learning_rate=0.001

|

||||

burn_in=1000

|

||||

max_batches=500200

|

||||

policy=steps

|

||||

steps=400000,450000

|

||||

scales=.1,.1

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=28

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=64

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=31

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=64

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=50

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=62

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=128

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=70

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=98

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=112

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=110

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=109

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=105

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=114

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=105

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=256

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=2

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=38

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=69

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=113

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=114

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=119

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=110

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=127

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||

activation=linear

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=127

|

||||

size=1

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[convolutional]

|

||||

batch_normalize=1

|

||||

filters=512

|

||||

size=3

|

||||

stride=1

|

||||

pad=1

|

||||

activation=leaky

|

||||

|

||||

[shortcut]

|

||||

from=-3

|

||||